Fair Hiring 2.0: Smart Practices to Counter Unconscious & AI Bias

It’s one thing to say your organization is committed to inclusive hiring. It’s quite another to ensure your practices actually deliver on that promise.

In today’s hiring landscape, bias shows up in two powerful ways: through human decisions, and through the AI-driven tools we increasingly rely on. If we want to build truly inclusive and equitable workplaces, we have to tackle both.

Inclusive hiring remains a hot topic for good reason. McKinsey’s research has repeatedly found that companies with more diverse leadership teams are more likely to outperform their peers on profitability (McKinsey, 2023 – Diversity Matters Even More: The Case for Holistic Impact).

But bias—whether unconscious or baked into algorithms—can quietly derail even the best intentions. Let’s talk about what “Fair Hiring 2.0” looks like today—and what you can do to make sure your processes drive real progress.

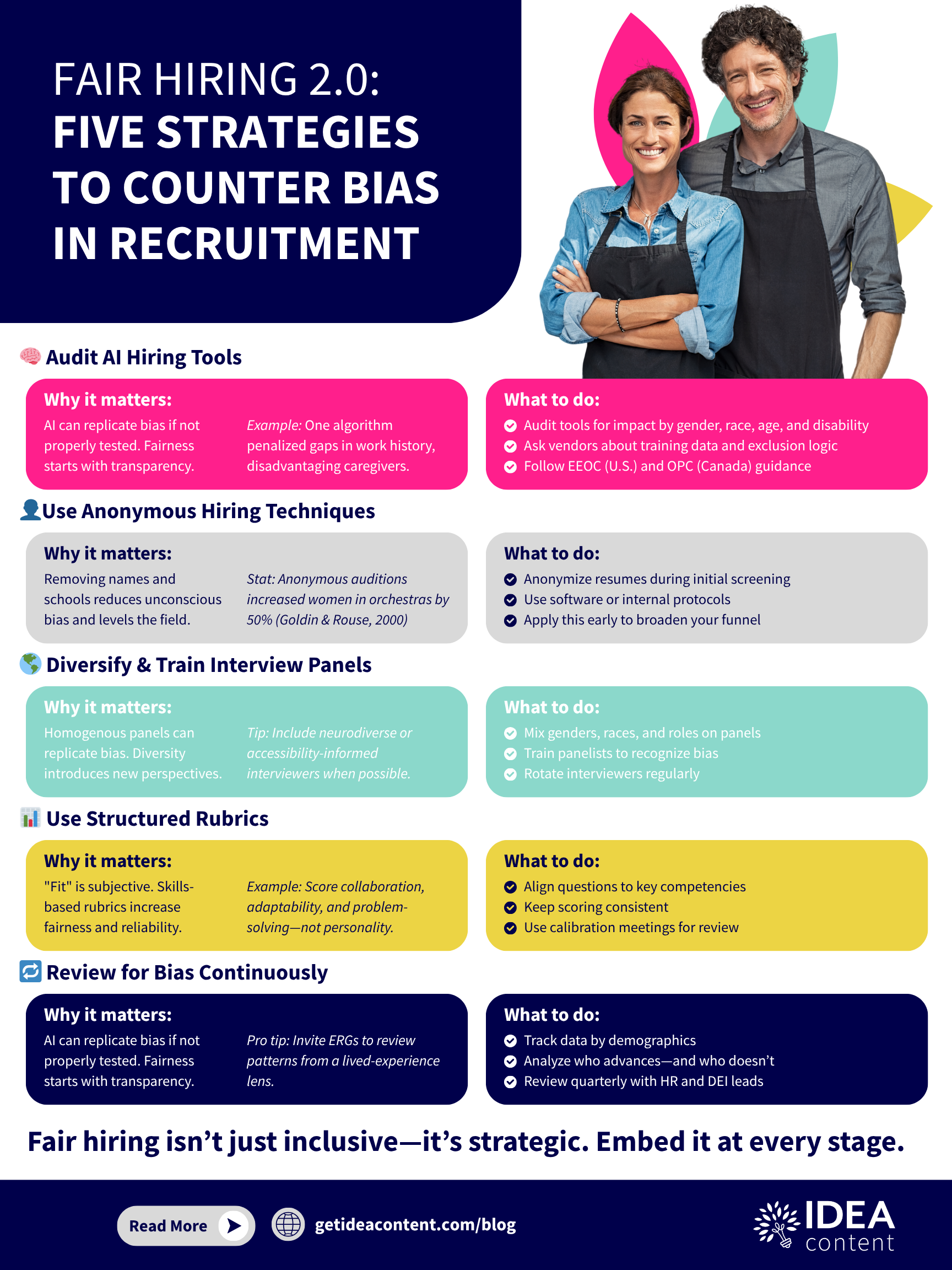

Audit Your Hiring Algorithms

AI and automated screening tools are now common in recruitment. But studies have shown they can reinforce bias if not carefully audited. For example, Frida Polli’s Harvard Business Review article explains how AI tools trained on historical data can replicate past discrimination—unless deliberately countered.

Newer insights from Bhaskar Chakravorti in Harvard Business Review (2025) emphasize the importance of proactive guardrails: from requiring algorithm explainability to regularly testing outcomes for differential impact by race, gender, and disability.

Bottom line: Conduct regular audits of your tools. Ask vendors tough questions about training data, fairness metrics, and transparency.
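One common way to test outcomes for differential impact is to compare selection rates across groups at each stage a tool controls. In US practice, the "four-fifths rule" of thumb from the Uniform Guidelines on Employee Selection Procedures treats a group selection rate below 80% of the highest group's rate as evidence of possible adverse impact. The sketch below computes those impact ratios. The record format, group labels, and function names are hypothetical, and the sample numbers are made up for illustration.

```python
from collections import defaultdict

# Hypothetical record format: (group_label, advanced), where `advanced` is True
# if the screening tool moved the candidate to the next stage.


def selection_rates(records):
    """Return the share of candidates in each group who advanced."""
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, passed in records:
        totals[group] += 1
        if passed:
            advanced[group] += 1
    return {g: advanced[g] / totals[g] for g in totals}


def adverse_impact_ratios(records, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (impact_ratio, flagged)}; `flagged` is True when the
    ratio falls below `threshold` (0.8 mirrors the four-fifths rule of thumb).
    """
    rates = selection_rates(records)
    best = max(rates.values())
    result = {}
    for group, rate in rates.items():
        # If nobody in any group advanced, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        result[group] = (ratio, ratio < threshold)
    return result


if __name__ == "__main__":
    # Made-up numbers: 50 of 100 group A candidates advance, 30 of 100 in group B.
    sample = ([("A", True)] * 50 + [("A", False)] * 50
              + [("B", True)] * 30 + [("B", False)] * 70)
    for group, (ratio, flagged) in sorted(adverse_impact_ratios(sample).items()):
        print(f"{group}: impact ratio {ratio:.2f}{'  <- review' if flagged else ''}")
```

Here group B's ratio is 0.60 (a 30% selection rate against 50% for group A), so it is flagged. Treat a flag as a prompt for closer review rather than a legal conclusion: with small applicant pools the ratio can swing widely, so statistical significance tests are often used alongside it.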

Embrace Anonymous Hiring Where Possible

Anonymous hiring (removing names, schools, or other demographic clues from applications) remains a powerful tool for reducing unconscious bias. A study in the American Economic Review found that "blind" auditions, in which musicians played behind a screen, improved women's chances of advancing and being hired by symphony orchestras (Goldin & Rouse, 2000).

Anonymous screening isn’t feasible for every role, but applying it at early stages can help broaden your candidate pipeline.
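When applications arrive as structured data (for example, an export from an applicant tracking system), early-stage anonymization can start with dropping identifying fields before reviewers see the record. The sketch below assumes hypothetical field names such as `name`, `email`, and `school`. Which fields count as identifying is a policy decision for your team, and free-text fields like cover letters can still leak demographic clues, so treat this as a starting point rather than a complete solution.

```python
# Hypothetical set of fields treated as identifying; adjust to your own policy.
# graduation_year and date_of_birth are included because they can signal age.
IDENTIFYING_FIELDS = {"name", "email", "phone", "address", "photo_url",
                      "school", "graduation_year", "date_of_birth"}


def anonymize(application, identifying=IDENTIFYING_FIELDS):
    """Return a new dict without identifying fields; the input is not modified.

    Fields not in `identifying` (such as a neutral candidate ID) are kept,
    so reviewers' scores can be re-linked to the full record later.
    """
    return {k: v for k, v in application.items() if k not in identifying}


if __name__ == "__main__":
    app = {
        "candidate_id": "C-0042",
        "name": "Jordan Example",
        "school": "Example University",
        "years_experience": 6,
        "skills": ["SQL", "Python", "stakeholder reporting"],
    }
    print(anonymize(app))
```

Keeping a neutral candidate ID is the design choice that makes the blind stage reversible: reviewers score the redacted record, and recruiters reattach the full application only after screening decisions are made.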

Use Diverse Interview Panels

Who conducts your interviews matters. Diverse panels help surface different perspectives—and counter the risk of groupthink or affinity bias.

Research shows that panels with gender and racial diversity are more likely to assess candidates from underrepresented groups fairly (Harvard Business Review, 2021). Make panel diversity a formal part of your hiring process design.

Apply Structured Rubrics for Bias Reviews

Unstructured interviews are fertile ground for bias. Instead, use clear, skills-based rubrics aligned to role requirements—and train interviewers to apply them consistently.

Structured processes don’t just improve fairness; research on selection methods has consistently found that structured interviews predict job performance better than unstructured ones.
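In a structured process, every interviewer scores every candidate against the same competencies on the same anchored scale, and ratings are combined only after each interviewer submits independently. The sketch below averages 1-5 ratings per competency into a weighted score and flags competencies where interviewers disagree by more than a set spread, a useful prompt for a calibration discussion. The competency names, weights, the 1-5 scale, and the `max_spread` threshold are hypothetical choices for illustration.

```python
from statistics import mean

# Hypothetical rubric: competency -> weight (weights sum to 1.0).
RUBRIC = {"problem_solving": 0.4, "communication": 0.3, "role_knowledge": 0.3}
SCALE = range(1, 6)  # anchored 1-5 scale; each point should have a written description


def score_candidate(ratings, rubric=RUBRIC, max_spread=2):
    """Combine independent interviewer ratings for one candidate.

    `ratings` maps interviewer -> {competency: score}. Every interviewer must
    rate exactly the rubric competencies on the shared scale. Returns the
    weighted average (rounded to 2 places) and the competencies where the gap
    between the highest and lowest rating exceeds `max_spread`.
    """
    if not ratings:
        raise ValueError("no ratings submitted")
    for interviewer, scores in ratings.items():
        if set(scores) != set(rubric):
            raise ValueError(f"{interviewer} did not rate exactly the rubric competencies")
        if any(s not in SCALE for s in scores.values()):
            raise ValueError(f"{interviewer} used a score outside the 1-5 scale")

    total = 0.0
    needs_calibration = []
    for competency, weight in rubric.items():
        values = [scores[competency] for scores in ratings.values()]
        total += weight * mean(values)
        if max(values) - min(values) > max_spread:
            needs_calibration.append(competency)
    return round(total, 2), needs_calibration


if __name__ == "__main__":
    panel = {
        "interviewer_1": {"problem_solving": 4, "communication": 3, "role_knowledge": 5},
        "interviewer_2": {"problem_solving": 4, "communication": 5, "role_knowledge": 2},
    }
    print(score_candidate(panel))
```

With this sample panel, the weighted score is 3.85 and `role_knowledge` is flagged because the two ratings differ by 3 points. Requiring every interviewer to rate every competency is deliberate: it stops a single impression from standing in for the whole rubric.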

Final Thought

If you’re still using hiring practices designed in 2010, it’s time for an upgrade. The mix of human and algorithmic bias demands that we all get smarter, faster—and more accountable—in how we design hiring systems.

Measure it. Audit it. Train for it. And remember: building an inclusive workforce starts long before onboarding. It starts with who you choose to let through the door.

IDEA Content is here to help make your workplace more inclusive. We have hundreds of communications templates, policy templates, and ready-to-use toolkits designed to make your life easier. Become a free subscriber today (yes, it’s really free) to check out a selection of our offerings.